Introduction

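Many people interested in LLMs want to download open-source models to experiment with, but models that start at 10 GB or more simply cannot be downloaded from Hugging Face over a network in mainland China. I tried a mirror site several times in a row, and every attempt broke off halfway; since it could not resume from where it stopped, a complete download was effectively impossible.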

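The goal here is to get the model onto a GPU server. If you can set up a proxy, that is certainly simpler than my approach, but this is a university server and I didn't dare run a proxy on it. If you would rather not use a proxy, you can follow the method below.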

1. Preparation

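All this method needs is a VPS located outside China. Since you don't need it permanently, I recommend Vultr (SSD VPS Servers, Cloud Servers and Cloud Hosting). The cheapest plan is $6 a month (but it only has a 20 GB disk, which may not be enough, so I suggest a larger plan). Instances are billed by the hour and can be created on demand, so you can top up $5, deploy an instance whenever you need one, and destroy it when you are done; used this way, $5 lasts a long time.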
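Because a model can easily outgrow the 20 GB disk of the smallest plan, it can help to check free space on the VPS before starting a download. Below is a minimal sketch assuming a POSIX-compliant `df`; `has_free_gb` is a hypothetical helper name, not part of any tool mentioned in this post, and the required size is your own estimate of the model's size.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not from any tool in this post): succeed if the
# filesystem holding DIR has at least REQUIRED_GB GiB available.
# Usage: has_free_gb DIR REQUIRED_GB
has_free_gb() {
  local dir="$1" required_gb="$2" avail_kb
  # -P gives POSIX one-line-per-filesystem output; -k reports 1024-byte blocks.
  # Row 2, column 4 is the space available to unprivileged users.
  avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
  # df failed (e.g. DIR does not exist): report a distinct error status
  [[ -n "$avail_kb" ]] || return 2
  (( avail_kb >= required_gb * 1024 * 1024 ))
}
```

For example, `has_free_gb "$HOME" 30 || echo "Not enough disk space, pick a larger plan"` warns before a 30 GB download would fill the disk.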

If you already have a long-term server abroad, you can skip the above.

2. Downloading

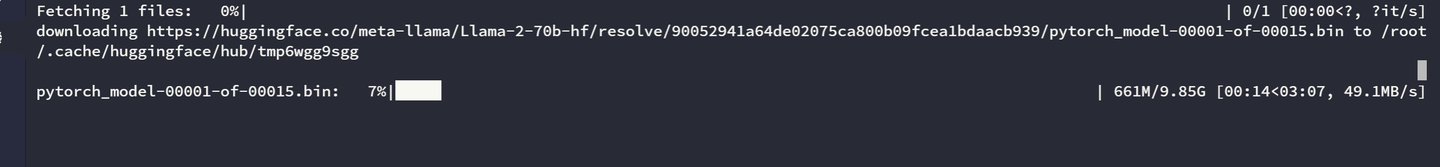

有了国外的服务器网络环境就不是问题了,而且他们的下载带宽通常都很够,下面是我下载的速度:

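The 9.85 GB model took only 3 minutes, which is impressively fast. Moving it to the server in China is then easy; you can use FTP or HTTP. I installed nginx, downloaded the model straight into /var/www/html, and then pulled it with `wget -c http://[yourIp]/[filePath]` (`-c` lets wget resume a partial file). Because of the VPS's upload bandwidth this is slower than downloading directly from Hugging Face through a proxy, but it is far more stable than the mirror site and doesn't break off halfway.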
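Since each file travels twice (Hugging Face to the VPS, then the VPS to the server in China), it can be worth confirming that the final copy is byte-identical. A minimal sketch, assuming GNU coreutils and findutils (`sha256sum`, `sort -z`, `xargs -0 -r`); `make_manifest`, `verify_manifest` and the `SHA256SUMS` file name are hypothetical choices, not part of the original setup.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of the original setup) for checking that files
# copied from the VPS to the server in China arrived intact.
# Assumes GNU coreutils/findutils.

# On the VPS: write DIR/SHA256SUMS with one checksum per file under DIR.
make_manifest() {
  local dir="$1"
  (
    cd "$dir" || exit 1
    # Exclude the manifest itself; sort for a stable order.
    find . -type f ! -name SHA256SUMS -print0 | sort -z | xargs -0 -r sha256sum > SHA256SUMS
  )
}

# On the server in China: re-hash every listed file; non-zero exit on any mismatch.
verify_manifest() {
  local dir="$1"
  (
    cd "$dir" || exit 1
    sha256sum --quiet -c SHA256SUMS
  )
}
```

Run `make_manifest` on the model directory under /var/www/html on the VPS, fetch `SHA256SUMS` with `wget -c` together with the model files, then run `verify_manifest` on the server in China; a non-zero exit status means at least one file should be downloaded again.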

3. Summary

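In short, this method uses your own server abroad as a relay. It adds one step, but the foreign server downloads so fast that it costs little extra time. It is the most stable and reliable approach I have found so far; if you know a better one, feel free to share it.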

#2024/05/02 Update

I'd also like to recommend a handy download script. It supports resuming, so you don't have to worry about a download dying halfway.
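For context on the `HF_ENDPOINT` mirror setting in this update: the hfd.sh script builds each file's download URL as `$HF_ENDPOINT/<repo_id>/resolve/main/<file>` (with a `datasets/` prefix for datasets) and falls back to `https://huggingface.co` when `HF_ENDPOINT` is unset, so exporting `HF_ENDPOINT` only swaps the host. A minimal sketch of that URL rule; `hf_resolve_url` is a hypothetical helper name, not part of hfd.sh.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of hfd.sh) reproducing the script's URL rule:
#   $HF_ENDPOINT/<repo_id>/resolve/main/<file>
# Usage: hf_resolve_url <repo_id> <file> [model|dataset]
hf_resolve_url() {
  local repo="$1" file="$2" kind="${3:-model}"
  # Same default as hfd.sh when HF_ENDPOINT is not exported
  local endpoint="${HF_ENDPOINT:-https://huggingface.co}"
  if [[ "$kind" == "dataset" ]]; then
    repo="datasets/$repo"
  fi
  printf '%s/%s/resolve/main/%s\n' "$endpoint" "$repo" "$file"
}
```

For example, `HF_ENDPOINT=https://hf-mirror.com hf_resolve_url bigscience/bloom-560m config.json` prints `https://hf-mirror.com/bigscience/bloom-560m/resolve/main/config.json`.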

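First, set an environment variable to point the Hugging Face endpoint at the mirror:

```bash
export HF_ENDPOINT="https://hf-mirror.com"
```

Then install aria2, which provides the `aria2c` command: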

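```bash
sudo apt install aria2   # Ubuntu
# or
sudo yum install aria2   # CentOS (may require the EPEL repository)
```

The script also checks for `curl`, `git` and `git-lfs`, so install those too if they are missing. Then download the script: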

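```bash
wget https://padeoe.com/file/hfd/hfd.sh
```

If that download fails, copy the source code below into a file named `hfd.sh` by hand. Either way, make it executable with `chmod +x hfd.sh`: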

```bash
#!/usr/bin/env bash

# Color definitions
RED='\033[0;31m'
GREEN='\033[0;32m'
YELLOW='\033[1;33m'
NC='\033[0m' # No Color

trap 'printf "${YELLOW}\nDownload interrupted. If you re-run the command, you can resume the download from the breakpoint.\n${NC}"; exit 1' INT

display_help() {
    cat << EOF
Usage:
  hfd <repo_id> [--include include_pattern] [--exclude exclude_pattern] [--hf_username username] [--hf_token token] [--tool aria2c|wget] [-x threads] [--dataset] [--local-dir path]

Description:
  Downloads a model or dataset from Hugging Face using the provided repo ID.

Parameters:
  repo_id        The Hugging Face repo ID in the format 'org/repo_name'.
  --include      (Optional) Flag to specify a string pattern to include files for downloading.
  --exclude      (Optional) Flag to specify a string pattern to exclude files from downloading.
  include/exclude_pattern  The pattern to match against filenames, supports wildcard characters. e.g., '--exclude *.safetensors', '--include vae/*'.
  --hf_username  (Optional) Hugging Face username for authentication. **NOT EMAIL**.
  --hf_token     (Optional) Hugging Face token for authentication.
  --tool         (Optional) Download tool to use. Can be aria2c (default) or wget.
  -x             (Optional) Number of download threads for aria2c. Defaults to 4.
  --dataset      (Optional) Flag to indicate downloading a dataset.
  --local-dir    (Optional) Local directory path where the model or dataset will be stored.

Example:
  hfd bigscience/bloom-560m --exclude '*.safetensors'
  hfd meta-llama/Llama-2-7b --hf_username myuser --hf_token mytoken -x 4
  hfd lavita/medical-qa-shared-task-v1-toy --dataset
EOF
    exit 1
}

MODEL_ID=$1
# Show help before checking tools, so "hfd -h" works everywhere
[[ -z "$MODEL_ID" || "$MODEL_ID" =~ ^-h ]] && display_help
shift

# Default values
TOOL="aria2c"
THREADS=4
HF_ENDPOINT=${HF_ENDPOINT:-"https://huggingface.co"}

while [[ $# -gt 0 ]]; do
    case $1 in
        --include) INCLUDE_PATTERN="$2"; shift 2 ;;
        --exclude) EXCLUDE_PATTERN="$2"; shift 2 ;;
        --hf_username) HF_USERNAME="$2"; shift 2 ;;
        --hf_token) HF_TOKEN="$2"; shift 2 ;;
        --tool) TOOL="$2"; shift 2 ;;
        -x) THREADS="$2"; shift 2 ;;
        --dataset) DATASET=1; shift ;;
        --local-dir) LOCAL_DIR="$2"; shift 2 ;;
        *) shift ;;
    esac
done

# Check that the download tool, curl, git and git-lfs are installed
check_command() {
    if ! command -v "$1" &>/dev/null; then
        echo -e "${RED}$1 is not installed. Please install it first.${NC}"
        exit 1
    fi
}

[[ "$TOOL" == "aria2c" ]] && check_command aria2c
[[ "$TOOL" == "wget" ]] && check_command wget
check_command curl; check_command git; check_command git-lfs

# Default local directory: the repo name without the org prefix
if [[ -z "$LOCAL_DIR" ]]; then
    LOCAL_DIR="${MODEL_ID#*/}"
fi

if [[ "$DATASET" == 1 ]]; then
    MODEL_ID="datasets/$MODEL_ID"
fi

echo "Downloading to $LOCAL_DIR"

if [ -d "$LOCAL_DIR/.git" ]; then
    printf "${YELLOW}%s exists, Skip Clone.\n${NC}" "$LOCAL_DIR"
    cd "$LOCAL_DIR" && GIT_LFS_SKIP_SMUDGE=1 git pull || { printf "${RED}Git pull failed.${NC}\n"; exit 1; }
else
    REPO_URL="$HF_ENDPOINT/$MODEL_ID"
    GIT_REFS_URL="${REPO_URL}/info/refs?service=git-upload-pack"
    echo "Testing GIT_REFS_URL: $GIT_REFS_URL"
    response=$(curl -s -o /dev/null -w "%{http_code}" "$GIT_REFS_URL")
    if [ "$response" == "401" ] || [ "$response" == "403" ]; then
        if [[ -z "$HF_USERNAME" || -z "$HF_TOKEN" ]]; then
            printf "${RED}HTTP Status Code: $response.\nThe repository requires authentication, but --hf_username and --hf_token are not passed. Please get token from https://huggingface.co/settings/tokens.\nExiting.\n${NC}"
            exit 1
        fi
        REPO_URL="https://$HF_USERNAME:$HF_TOKEN@${HF_ENDPOINT#https://}/$MODEL_ID"
    elif [ "$response" != "200" ]; then
        printf "${RED}Unexpected HTTP Status Code: $response\n${NC}"
        printf "${YELLOW}Executing debug command: curl -v %s\nOutput:${NC}\n" "$GIT_REFS_URL"
        curl -v "$GIT_REFS_URL"; printf "\n${RED}Git clone failed.\n${NC}"; exit 1
    fi
    echo "git clone $REPO_URL $LOCAL_DIR"
    # Clone without LFS content; the large files are fetched separately below
    GIT_LFS_SKIP_SMUDGE=1 git clone "$REPO_URL" "$LOCAL_DIR" && cd "$LOCAL_DIR" || { printf "${RED}Git clone failed.\n${NC}"; exit 1; }
    # Empty the LFS pointer files so the downloader starts each file from scratch
    for file in $(git lfs ls-files | awk '{print $3}'); do
        truncate -s 0 "$file"
    done
fi

printf "\nStart Downloading lfs files, bash script:\ncd $LOCAL_DIR\n"
files=$(git lfs ls-files | awk '{print $3}')
declare -a urls

# Print the command for every LFS file (commented out if filtered) and queue the rest
for file in $files; do
    url="$HF_ENDPOINT/$MODEL_ID/resolve/main/$file"
    file_dir=$(dirname "$file")
    mkdir -p "$file_dir"
    if [[ "$TOOL" == "wget" ]]; then
        download_cmd="wget -c \"$url\" -O \"$file\""
        [[ -n "$HF_TOKEN" ]] && download_cmd="wget --header=\"Authorization: Bearer ${HF_TOKEN}\" -c \"$url\" -O \"$file\""
    else
        download_cmd="aria2c --console-log-level=error -x $THREADS -s $THREADS -k 1M -c \"$url\" -d \"$file_dir\" -o \"$(basename "$file")\""
        [[ -n "$HF_TOKEN" ]] && download_cmd="aria2c --header=\"Authorization: Bearer ${HF_TOKEN}\" --console-log-level=error -x $THREADS -s $THREADS -k 1M -c \"$url\" -d \"$file_dir\" -o \"$(basename "$file")\""
    fi
    [[ -n "$INCLUDE_PATTERN" && ! "$file" == $INCLUDE_PATTERN ]] && printf "# %s\n" "$download_cmd" && continue
    [[ -n "$EXCLUDE_PATTERN" && "$file" == $EXCLUDE_PATTERN ]] && printf "# %s\n" "$download_cmd" && continue
    printf "%s\n" "$download_cmd"
    urls+=("$url|$file")
done

for url_file in "${urls[@]}"; do
    IFS='|' read -r url file <<< "$url_file"
    printf "${YELLOW}Start downloading ${file}.\n${NC}"
    file_dir=$(dirname "$file")
    # Send the auth header only when a token was given. (The previous
    # "A && B || C" form silently retried without the token if B failed.)
    auth=()
    [[ -n "$HF_TOKEN" ]] && auth=(--header="Authorization: Bearer ${HF_TOKEN}")
    if [[ "$TOOL" == "wget" ]]; then
        wget "${auth[@]}" -c "$url" -O "$file"
    else
        aria2c "${auth[@]}" --console-log-level=error -x "$THREADS" -s "$THREADS" -k 1M -c "$url" -d "$file_dir" -o "$(basename "$file")"
    fi
    # $? is the exit status of whichever downloader ran above
    if [[ $? -eq 0 ]]; then
        printf "Downloaded %s successfully.\n" "$url"
    else
        printf "${RED}Failed to download %s.\n${NC}" "$url"; exit 1
    fi
done

printf "${GREEN}Download completed successfully.\n${NC}"
```

The two arguments you will usually change are the model ID and the thread count (`-x`); set them to whatever you need, e.g. `./hfd.sh bigscience/bloom-560m -x 8`.
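Because re-running hfd.sh resumes an interrupted download, a flaky connection can be handled by simply running it again. A minimal sketch of an automatic retry loop; `retry` is a hypothetical helper, not part of hfd.sh, and the attempt count and delay are arbitrary.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper (not part of hfd.sh): run a command until it succeeds,
# at most MAX times, sleeping DELAY seconds between attempts.
# Usage: retry MAX DELAY command [args...]
retry() {
  local max="$1" delay="$2" attempt=1
  shift 2
  until "$@"; do
    if (( attempt >= max )); then
      printf 'Giving up after %d attempts: %s\n' "$attempt" "$*" >&2
      return 1
    fi
    printf 'Attempt %d failed, retrying in %ss...\n' "$attempt" "$delay" >&2
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 0
}
```

For example, `retry 10 30 ./hfd.sh meta-llama/Llama-2-7b --hf_username myuser --hf_token mytoken -x 8` re-runs the download up to 10 times, 30 seconds apart. Since hfd.sh traps Ctrl-C and exits with status 1, an interrupt during a download may be counted as a failed attempt and retried; pressing Ctrl-C during the wait between attempts stops the loop.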
